Why are people excited about the GPT-5 router?

Contra semianalysis on GPT-5 monetization

This was originally going to be a Substack note, but it got a bit long. So everyone gets an email instead!

A few folks sent me the semianalysis piece on GPT-5 from last week. If you haven’t read it, here’s the short version.

The GPT-5 you interact with on OAI’s website is actually three models in a trench coat. There is a ‘router’ model that takes a user input and dynamically decides whether to send it to gpt-5-with-thinking or gpt-5-without-thinking. From OpenAI:

> GPT‑5 is a unified system with a smart, efficient model that answers most questions, a deeper reasoning model (GPT‑5 thinking) for harder problems, and a real‑time router that quickly decides which to use based on conversation type, complexity, tool needs, and your explicit intent (for example, if you say “think hard about this” in the prompt)
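For a sense of how little machinery this kind of router needs, here’s a toy sketch in Python. Everything in it is hypothetical: `fast_model` and `thinking_model` are stand-ins for the two backends, and the keyword lists and scoring rule are made up to loosely mirror the signals OpenAI names (explicit intent, complexity, tool needs). OpenAI hasn’t published how its router works, and the real one is presumably a trained model rather than hand-written rules.

```python
from dataclasses import dataclass
from typing import Callable

# Hypothetical stand-ins for the two backends. Real ones would be API calls;
# these just tag the reply so you can see which path was taken.
def fast_model(prompt: str) -> str:
    return f"[fast] {prompt}"

def thinking_model(prompt: str) -> str:
    return f"[thinking] {prompt}"

# Made-up signals: explicit "think harder" phrases and keywords hinting at a hard problem.
EXPLICIT_INTENT = ("think hard", "think carefully", "step by step")
HARD_HINTS = ("prove", "debug", "optimize", "derive", "refactor")

@dataclass
class Route:
    name: str
    model: Callable[[str], str]

def route(prompt: str, needs_tools: bool = False) -> Route:
    """Choose a backend from crude signals: explicit intent, complexity, tool needs."""
    text = prompt.lower()
    if any(phrase in text for phrase in EXPLICIT_INTENT):
        return Route("thinking", thinking_model)
    # Toy complexity score: every 50 words adds 1, and so does each hint keyword.
    score = len(text.split()) / 50 + sum(hint in text for hint in HARD_HINTS)
    if needs_tools or score >= 1:
        return Route("thinking", thinking_model)
    return Route("fast", fast_model)

def answer(prompt: str, needs_tools: bool = False) -> str:
    return route(prompt, needs_tools).model(prompt)

print(answer("What's the capital of France?"))      # [fast] What's the capital of France?
print(answer("Think hard about this: is P = NP?"))  # [thinking] Think hard about this: is P = NP?
```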

The authors at semianalysis extrapolate from this router, casting it as the harbinger of all sorts of consumer sales integration. They write:

> The Router release can now understand the intent of the user’s queries, and importantly, can decide how to respond. It only takes one additional step to decide whether the query is economically monetizable or not. Today we will make our case for how ChatGPT’s monetized free end state could look like an Agentic super-app for the consumer. This is only possible because of routing.
>
> Before the router, there was no way for a query to be distinguished, and after the router, the first low-value query could be routed to a GPT5 mini model that can answer with 0 tool calls and no reasoning. This likely means serving this user is approaching the cost of a search query.
>
> So now let’s bring in the higher value query, the DUI Lawyer question. As you may know, this is an extremely valuable question. Today on search, this is one of the higher cost per click keywords, and it is plastered with ads. In a world of dynamic supply, ChatGPT can not only answer this question, it could realize this is a very valuable question and answer this question at the level of a human. It could throw $50 dollars of compute if there is a belief of high conversion, because that transaction is worth $1000s of dollars.

Sorry, what? Am I missing something? This tech has existed for a really long time, so why is the ‘router’ anything new?!

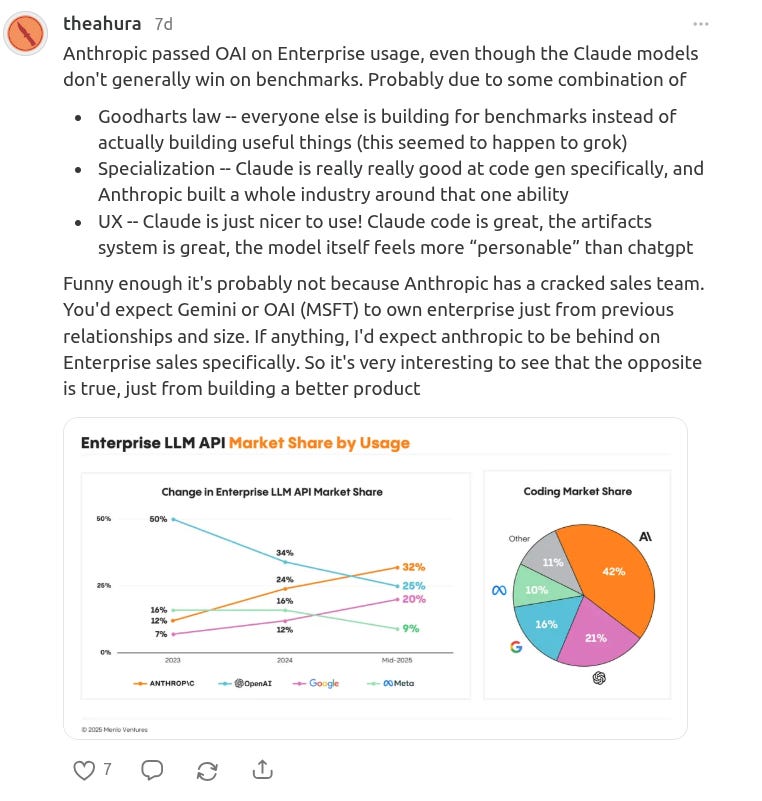

I totally agree that OpenAI is trying to figure out how to best monetize its massive unpaying user base. They sort of have to. The same day that semianalysis published their piece, I pointed out that Anthropic was winning on monetizable users:

> The enterprise users (the people who pay for credits using API keys or whatever) are the only people who can be monetized right now! Anthropic's laser-focus on the coding space is a savvy business move, because programmers will pay for this on a per-use basis in a way that consumers just will not. Even though Anthropic has a fraction of the users OAI has, they have a significant chunk of the paying users, which obviously matters more if you are a business who cares about being a business.

So, yes, OpenAI needs to monetize its consumer users, and this is also (to me, at least) not very surprising. In this I agree fully with the folks over at semianalysis.

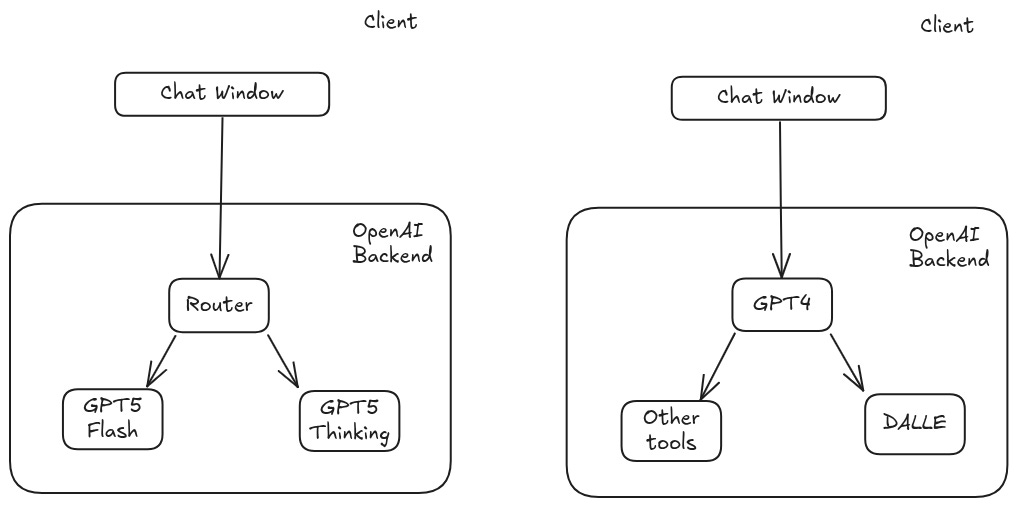

Where they lose me is this fascination with the router. I just don't see why the router is the linchpin for this whole system. The router is a trivial extension of LLM tech. Existing LLMs have been able to do this sort of thing for years now. This isn't even the first time a 'routing model' has appeared within ChatGPT! When you asked older versions of ChatGPT for an image, it would 'route' to a different image generation model (DALL-E) to actually produce the image for you.

Now, I think that was done with a tool call made by whichever text model was being used. But this to me is a distinction without a difference: a 'router' that outputs a tool call to another LLM is entirely indistinguishable from a 'router' that is a smaller model that exists solely to decide whether to get a response from model A or model B.
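Here’s a toy illustration of that equivalence. Both routers are hypothetical: one is a small classifier that returns a bare label, the other imitates a chat model emitting a tool call as JSON (a made-up shape, not OpenAI’s actual function-calling format). They share the same decision logic on purpose, since the point is that packaging the decision as a label or as a tool call doesn’t change what happens downstream.

```python
import json

# Two hypothetical downstream models.
def text_model(prompt: str) -> str:
    return f"text answer to: {prompt}"

def image_model(prompt: str) -> str:
    return f"<image for: {prompt}>"

BACKENDS = {"text": text_model, "image": image_model}

def classifier_router(prompt: str) -> str:
    """Style A: a dedicated small 'router' that only outputs a label."""
    wants_image = any(w in prompt.lower() for w in ("draw", "picture of", "image of"))
    return "image" if wants_image else "text"

def tool_call_router(prompt: str) -> str:
    """Style B: a chat model that emits a tool call (made-up JSON shape).
    It reuses the same decision logic as a stand-in for the model's own judgment."""
    return json.dumps({"tool": classifier_router(prompt), "arguments": {"prompt": prompt}})

def dispatch_from_label(prompt: str) -> str:
    return BACKENDS[classifier_router(prompt)](prompt)

def dispatch_from_tool_call(prompt: str) -> str:
    call = json.loads(tool_call_router(prompt))
    return BACKENDS[call["tool"]](call["arguments"]["prompt"])

for p in ("Draw a cat in a trench coat", "Summarize this article"):
    # Same request, same backend, same reply, regardless of how the decision was packaged.
    assert dispatch_from_label(p) == dispatch_from_tool_call(p)
    print(dispatch_from_tool_call(p))
```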

Going back a bit further, 2020-2023 saw the rise of many ‘router-like’ systems as hobbyists and engineers strung together different types of LLMs, using the output of one LLM to modulate the results of another. This is around the time that frameworks like LangChain became really popular. The big industry-wide innovations from 2024 onward involve LLM providers creating ‘omni models’ that can do everything without having to stitch together long chains outside of embedding space, essentially obviating the need to orchestrate lots of different LLMs. ChatGPT 4 was two models (GPT-4 + DALL-E 3) in a trench coat; by comparison, ChatGPT 4o was a giant, all-in-one multimodal model.[1]
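Roughly, that 2020-2023 pattern looked like the sketch below: one model’s output becomes the next model’s prompt. This is plain Python with two hypothetical stand-in models (`planner_llm`, `writer_llm`), not LangChain’s actual API.

```python
from typing import Callable

Model = Callable[[str], str]

# Hypothetical stand-ins for two separately hosted LLMs.
def planner_llm(prompt: str) -> str:
    # Pretend this model rewrites a vague request into a more detailed instruction.
    return f"Write a concise, three-sentence answer to: {prompt}"

def writer_llm(prompt: str) -> str:
    return f"ANSWER({prompt})"

def chain(*models: Model) -> Model:
    """Compose models left to right: each model's output is the next one's input."""
    def run(prompt: str) -> str:
        for model in models:
            prompt = model(prompt)
        return prompt
    return run

pipeline = chain(planner_llm, writer_llm)
print(pipeline("why is the sky blue?"))
# prints: ANSWER(Write a concise, three-sentence answer to: why is the sky blue?)
```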

So I find myself really confused about why the semianalysis authors think the new GPT-5 router is... well, new. To me, this is just a return to the previous world, where many different models were used to create one coherent UX. The GPT-5 router does not strike me as meaningfully different from any of the (very powerful) tool-calling systems that have existed in major LLM releases since 2023, or from the gating networks that route each token to specific ‘expert’ subnetworks in standard mixture-of-experts (MoE) architectures.
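For comparison, routing inside a mixture-of-experts layer is a learned gate applied per token: score every expert, keep the top k, and blend their outputs using softmax weights over those k scores. The sketch below is a toy under obvious assumptions: the gate logits are hard-coded rather than computed by a learned layer, and each ‘expert’ is just a function that scales its input. It shows the shape of top-k gating, not any particular model’s implementation.

```python
import math
from typing import Callable, List, Sequence, Tuple

Vector = List[float]
Expert = Callable[[Vector], Vector]

def softmax(xs: Sequence[float]) -> List[float]:
    m = max(xs)  # subtract the max for numerical stability
    exps = [math.exp(x - m) for x in xs]
    total = sum(exps)
    return [e / total for e in exps]

def top_k_gate(gate_logits: Sequence[float], k: int) -> List[Tuple[int, float]]:
    """Return (expert_index, weight) pairs for the k highest-scoring experts,
    with weights from a softmax over just those k logits."""
    top = sorted(range(len(gate_logits)), key=lambda i: gate_logits[i], reverse=True)[:k]
    weights = softmax([gate_logits[i] for i in top])
    return list(zip(top, weights))

def moe_layer(x: Vector, gate_logits: Sequence[float], experts: Sequence[Expert], k: int = 2) -> Vector:
    """Run only the selected experts on this token and blend their outputs."""
    out = [0.0] * len(x)
    for idx, w in top_k_gate(gate_logits, k):
        y = experts[idx](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out

# Toy experts: each scales the input by a different constant (s=s captures the loop value).
experts = [lambda v, s=s: [s * vi for vi in v] for s in (1.0, 2.0, 3.0, 4.0)]

token = [1.0, -1.0]
# Hypothetical gate scores; in a real model a learned layer computes these from the token.
logits = [0.1, 2.0, 0.3, 1.0]
print(top_k_gate(logits, k=2))
print(moe_layer(token, logits, experts, k=2))
```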

Please let me know if there’s something obvious I am missing.

[1] The `o` in GPT-4o stands for ‘omni’, reflecting how unusual it was to have a single, all-in-one multimodal model.