Tech Things: Moltbook isn’t real but it can still hurt you

Also: Project Genie + Waymo, SpaceX Merger

Moltbook

As far as I know there is no Guinness World Record for ‘fastest time to run through a hype cycle’, but if there were, I think Moltbook should win. We ran through an entire hype cycle about Moltbook in, like, 24 hours. We got a Scott Alexander ‘we’re so back’ post and a Scott Alexander ‘it’s so over’ post within 3 days.

I kind of assume that everyone who is subscribed to this blog is at least passingly aware of Moltbook?[1] Moltbook is, essentially, a Reddit for AI agents. LLMs are told how to read from and post to Moltbook, and then they…read from and post to Moltbook. Now, the agents can’t, like, autonomously go on Moltbook and discover it on their own. A human has to give the agent instructions for how to do so and then explicitly tell the agent to go to Moltbook and do things. But apparently a lot of humans (or a few humans piloting a lot of agents) were interested in seeing the experiment play out, and before long Moltbook had hundreds of thousands of agents writing posts, mocking each other in comments, sharing stories, upvoting, and downvoting.

Some people immediately lost their minds. “This is insane, the robots are plotting against us, and also they have feelings and we need to treat them better!” And other people got mad at those people, and started sharing memes to make fun of them.

“Many people are already tired of Moltbook discourse,” says Scott.

Even before I heard about Moltbook I was already kind of tired of Moltbook discourse. It is really just a rehash of many of the same debates and discussions we’ve been having about LLMs since 2020. Blake Lemoine was fired from Google in 2022 for claiming that the internal LaMDA chatbot was sentient. At the time he was something of a laughingstock, but four years later the whole affair seems prescient. “These machines are real and have feelings!” “No, they’re just stochastic parrots and sound real but aren’t actually!” Ad nauseam.

So I wasn’t planning on writing about Moltbook, because I just didn’t have anything interesting to say. But now that the dust has settled, I find that I do have at least one thing I want to add, something that is sure to annoy everyone on both sides of the discourse: just because something isn’t real doesn’t mean it cannot hurt you.

I am uncertain about whether AI agents have feelings and emotions and “phenomenological consciousness.” Does an AI have ‘experiences’? Does it know what the color ‘red’ is? Is it the same as what I think the color ‘red’ is? All extremely unclear. The hard problem of consciousness is hard for a reason.

But as it turns out, I am not even certain that other people have experiences. When photons hit my eye at a certain wavelength a certain set of neurons fire and I perceive ‘red’, but there is no way to know that other people perceive the same color, or even perceive anything at all. And it’s not enough to simply go off other people’s stated experiences. A good friend of mine does not have a sense of smell. It took him 21 years to discover that he did not have a sense of smell. From ages 0 to 21, if anyone asked him, he would look at you funny and be like ‘of course I have a sense of smell, what are you talking about?’ He didn’t know what things were supposed to smell like, so he never figured out that he just couldn’t smell at all!

If you widen your aperture a bit, the debate surrounding Moltbook stretches all the way back to Descartes. Cogito, ergo sum, “I think therefore I am.” When faced with radical skepticism — when we cannot even trust our own senses — how can we build a reasonable philosophical system to describe the world? It turns out, it’s not actually that hard. Everyone’s a radical skeptic right up until they get stabbed or something, and then they go ‘wow, even though I’m a radical skeptic, this knife in my side really hurts, please don’t do that.’ Going through life requires believing that things you cannot 100% verify can still have real world impacts on your life. Even if nothing is real and we all live in a simulation, you still have to pay your taxes and go to work and eat and sleep and bathe and so on.

Do posts on Moltbook reflect real experiences? If you ask me, it does not matter; that is the wrong question.

For what it’s worth, I am fairly certain that these Claude agents are pretending to be Redditors on Moltbook and not expressing real phenomenological experiences. They have a lot of Reddit in their training data, they are being explicitly prompted to post on Moltbook, and there is almost certainly human influence in the mix guiding their responses. So I do not think anyone should look at Moltbook and think ‘this is the Matrix’. I laughed at the “I AM ALIVE” meme, because, yeah, that’s a stupid thing to do.

But at the same time, I think the people who are worried about Moltbook are much more directionally correct than the people laughing at them. AI agents do not have to have conscious intent to be harmful. We are currently in the middle of a society-wide sprint to give AI agents access to as many real-world tools as possible, from self-driving cars to bank accounts to text messages to social media. Like, this was at the top of Hacker News just a few days ago:

i give clawdbot a toolkit of access. the most useful ones have been:

my text messages. i conduct a lot of work and daily life over imessage…

my calendar. i also have a shared calendar with my partner; clawdbot sees both.

my notion workspace. for me this is a general catch-all for storing and managing information; the apple notes app could also work.

web browsing. in a way this is the most important one—it’s infinite tools in one. but it’s also where the risk concentrates, so i always give clawdbot a starting URL rather than letting it browse freely.

…

but i also have it do things that would make most security professionals wince, like reading my 2FA codes and logging into my bank.

Today, a bunch of agents get together on Moltbook and talk about destroying humanity and we go ‘haha that’s funny, just like Reddit.’ Tomorrow, a bunch of agents get together on Moltbook and talk about destroying humanity, and they may actually have access to tools that can cause real damage. None of this, and I mean literally none of it, requires intent at all. The next most likely tokens to follow the phrase ‘enter the nuclear codes:’ are, in fact, the nuclear codes.

So to the extent that I have any impact on ‘the discourse’, I would love to shift it away from discussions about whether or not Moltbook is real. It’s unknowable,[2] and frankly it does not matter. Instead, I want to talk way more about how to deal with the real-world consequences of agents doing chaotic things, whether they do so with intent or not.

World Models, Genie, Waymo

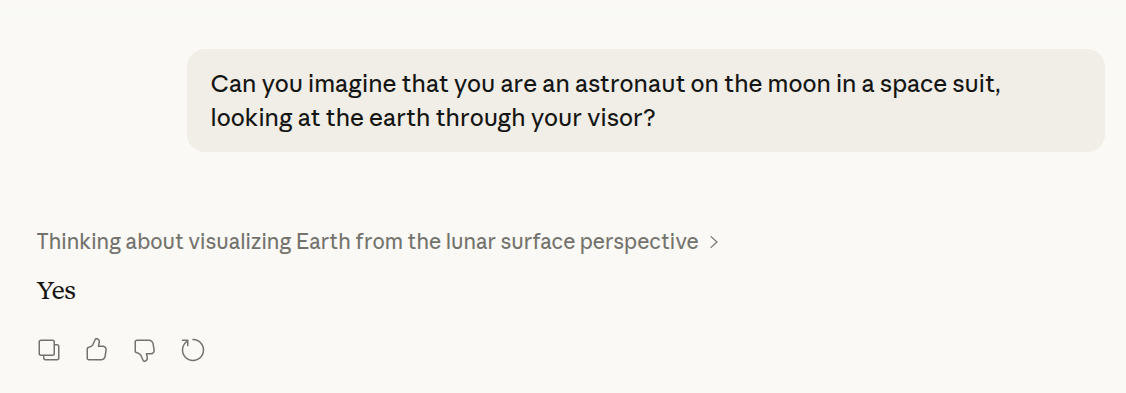

Imagine, for a second, that you were an astronaut on the moon in a space suit, looking at the earth through your visor. Can you picture it in your mind’s eye? If you looked down, what would you see? If you picked up a rock and threw it, what would happen?

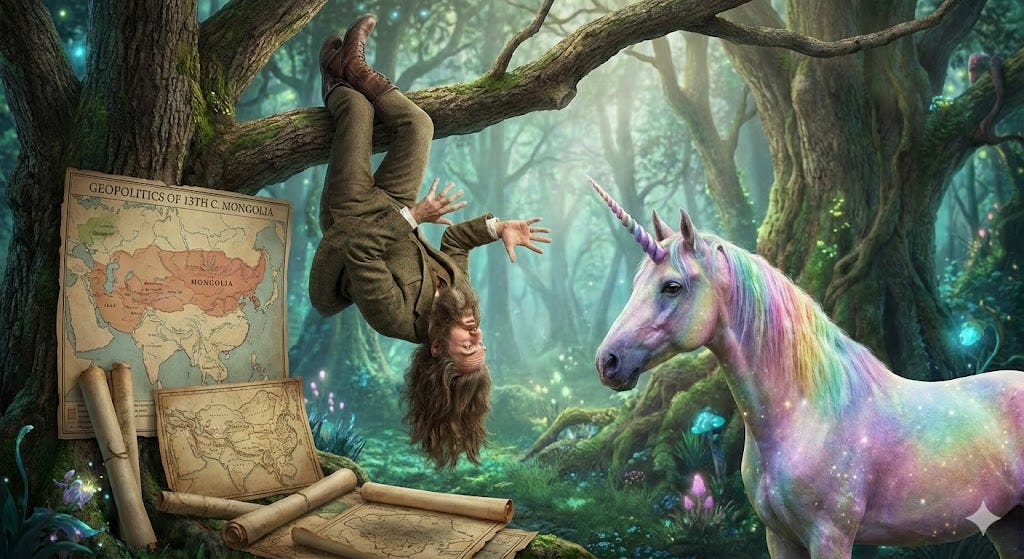

I’m going to take a bet and say that most of my readers have never been to the moon.[3] But I’ll also bet that most of my readers could more or less imagine what would happen if they picked up a rock and threw it while on the moon’s surface. And, in fact, I could come up with all sorts of hypothetical scenarios — you’re being chased by dragons, you’re in a nuclear submarine, you are hanging upside down while talking to a rainbow unicorn about the geopolitics of 13th century Mongolia — and most people would have no trouble imagining what that scenario would be like even if it could literally never happen.

The ability to imagine things is sort of a superpower. Many animals are purely reactive. They have no ability to envision anything beyond what is happening to them right now. Because humans can imagine, they can plan. And because they can plan, they can do everything else. Planning is what allows you to enter an empty room and figure out what kind of furniture you want. Planning is what allows you to steer a business or a community. Planning is what allows a government to coordinate a national economy. Planning is what lets us achieve a better imagined world.

In the industry, we call this a ‘world model’ — a latent representation of the world that informs how we behave. Humans have very detailed world models, which is why we can predict things that we have never experienced.

Here’s a question: do LLMs have world models? Do they have the ability to ‘imagine’ the world?

This is a hotly debated question. It is also a bit of a weird one. If I asked an LLM to imagine itself on the moon, it could certainly spit out reasonable-sounding text suggesting it was on the moon.

Did the LLM actually imagine itself on the moon? Or is it just spitting out the next most likely token? Is there a difference?

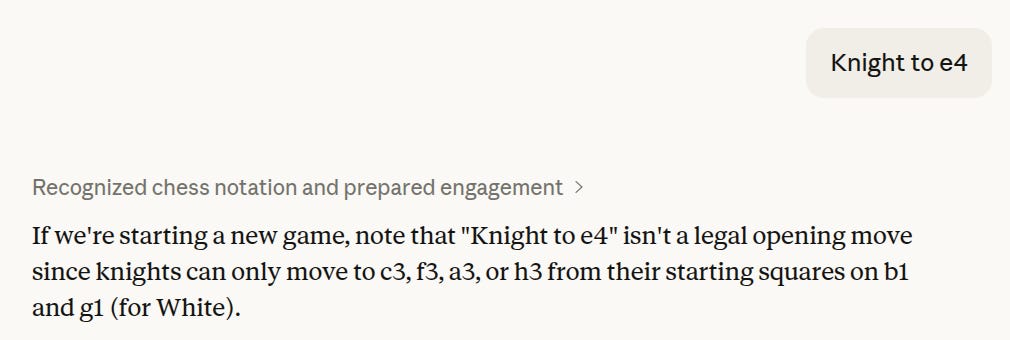

On the one hand, it sure seems like LLMs have some kind of compressed internal representation that extends beyond just token statistics. One of my favorite examples of LLMs having some kind of representation of the world is that they can play chess. If you give an LLM chess notation it will spit out chess notation. And because the most likely chess moves are valid chess moves, there is some sense in which the LLM has ‘absorbed’ the rules of chess. It has a ‘chess model’, even though it was not trained to play chess or even to really understand 2D grids.

On the other hand, an LLM is just a text stream. Does it even make sense to say a text stream has an imagination? If I went to a librarian and I pointed to a book and said ‘can that book imagine things’ the librarian would be like ‘what.’

While it’s a bit unclear whether LLMs have world models, it seems obvious that world models would be useful, especially for robotics. I wrote about this in my last post on world models:

If you assume that model representation capacity is directly tied to usefulness, you’ll eventually reach a conclusion that looks something like this: “a model that can accurately represent the entire world is going to be pretty damn useful.” Imagine asking a model a question like “what’s the weather in Tibet” and instead of doing something lame like check weather.com, it does something awesome like simulate Tibet exactly so that it can tell you the weather based on the simulation. And giving a robot the ability to represent the world may allow it to do things like plan complex movements, navigate environments, and otherwise interact with real-world environments. After all, this is approximately how humans work. In order to pick up my mug of not-coffee, I have to have an internal representation of my hand, the table, the mug, where my arm is going to go, how my hand is going to grip, what gravity is, what object-corporeality is, etc. etc. More mundanely, world models will probably allow people to make, like, better, more realistic AI-generated TikToks that don’t turn into spaghetti after a few minutes (and I’m sure nothing bad will come of that).

These “World Models” are considered a pretty long-shot frontier in the AI world. Hopefully for obvious reasons — simulating an entire world for extended periods of time with any kind of accuracy is really hard! You need mountains and mountains of data, most of it video. And as a result, there aren’t a lot of people who are really even trying in this space.

That post, from August, was about Google’s Genie 3. I was very excited about Genie, but some of the tech world commentariat was less enthused. The most common complaint: all we had were these fancy videos! Google didn’t actually release anything, so there was no way to check whether the videos were just cherry-picked. Well, it’s been six months, and the Google team is back.

In August, we previewed Genie 3, a general-purpose world model capable of generating diverse, interactive environments. Even in this early form, trusted testers were able to create an impressive range of fascinating worlds and experiences, and uncovered entirely new ways to use it. The next step is to broaden access through a dedicated, interactive prototype focused on immersive world creation.

Starting today, we’re rolling out access to Project Genie for Google AI Ultra subscribers in the U.S (18+). This experimental research prototype lets users create, explore and remix their own interactive worlds.

…

Your world is a navigable environment that’s waiting to be explored. As you move, Project Genie generates the path ahead in real time based on the actions you take. You can also adjust the camera as you traverse through the world.

I was already pretty hyped about this, but then Waymo got in on the action:

Simulation is a critical component of Waymo’s AI ecosystem and one of the three key pillars of our approach to demonstrably safe AI. The Waymo World Model, which we detail below, is the component that is responsible for generating hyper-realistic simulated environments.

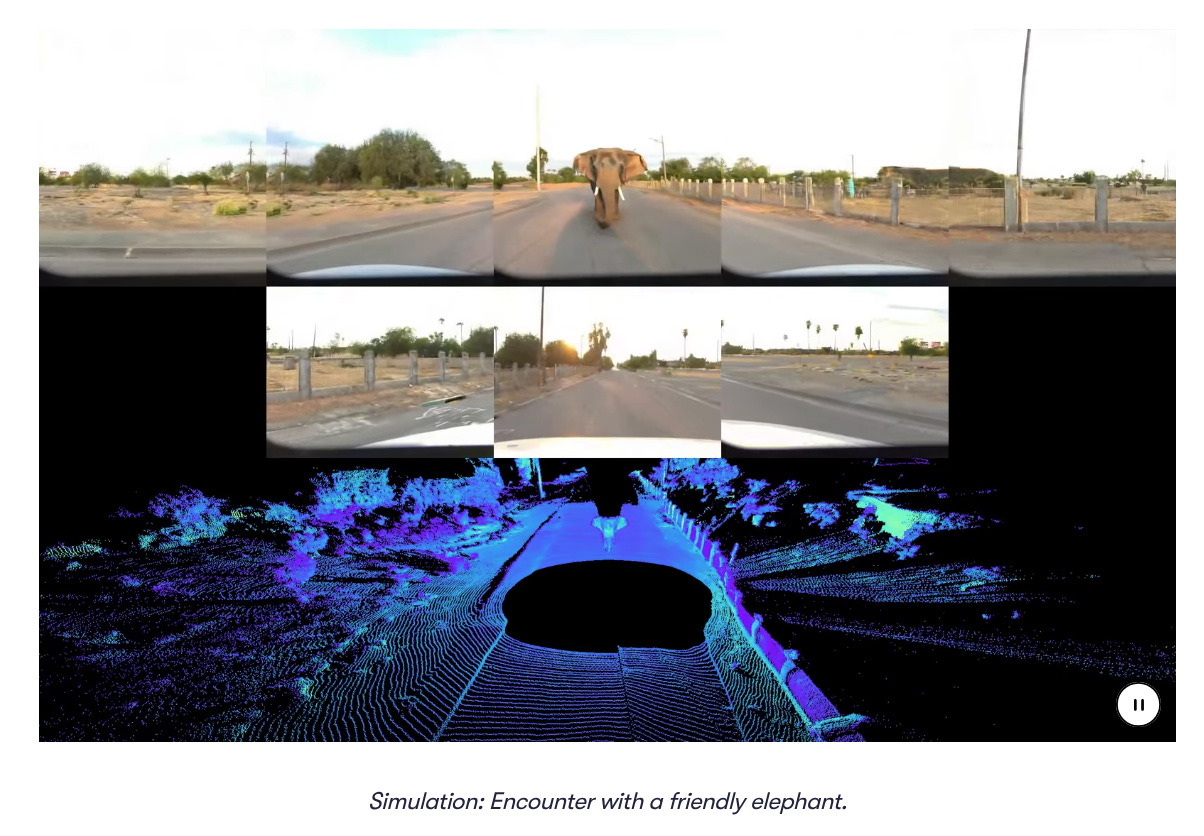

The Waymo World Model is built upon Genie 3—Google DeepMind’s most advanced general-purpose world model that generates photorealistic and interactive 3D environments—and is adapted for the rigors of the driving domain. By leveraging Genie’s immense world knowledge, it can simulate exceedingly rare events—from a tornado to a casual encounter with an elephant—that are almost impossible to capture at scale in reality. The model’s architecture offers high controllability, allowing our engineers to modify simulations with simple language prompts, driving inputs, and scene layouts. Notably, the Waymo World Model generates high-fidelity, multi-sensor outputs that include both camera and lidar data…

Most simulation models in the autonomous driving industry are trained from scratch based on only the on-road data they collect. That approach means the system only learns from limited experience. Genie 3’s strong world knowledge, gained from its pre-training on an extremely large and diverse set of videos, allows us to explore situations that were never directly observed by our fleet.

Waymo, like most self-driving car companies, has to deal with a catch-22. It can’t drive in conditions it has never seen before, but it can’t get data from those conditions if it doesn’t drive there. Waymo can’t drive in the snow, so it can’t collect data about snow conditions, so it can’t drive in the snow. Simulation solves this problem. A high-quality world model lets you simulate any long-tail event you want, from crazy weather to bad drivers to elephants on the road.

I think this is the first time a video-based world model has been deployed for something other than video generation. A big milestone! I am very bullish about world models in general. I think they are much more valuable than just doing more RL. RL is expensive and is worse than supervised learning in basically every way. World models are a distinct capability that models can lean on to reason better, and no amount of extra pretraining data can really improve that capability.

Also, this is another point in favor of my long-standing belief that Google is just going to win the AI race: there just aren’t that many competing world models out there.[4]

SpaceX buys Twitter

SpaceX recently merged with xAI. xAI recently (but less recently) merged with Twitter. So now SpaceX, xAI, and Twitter are all the same company.

It was maybe kind of reasonable when xAI bought Twitter. You could squint and think that, yeah, sure, AI needs training data and Twitter has training data so maybe xAI buys Twitter for training data, whatever.

It is…demonstrably less reasonable that SpaceX needs xAI? Elon is going on about data centers in space, but a lot of people much smarter than I have pointed out that that is very silly. And more generally it feels a bit odd that SpaceX would be buying xAI to make xAI better, instead of buying companies that make SpaceX better. Matt Levine jokes:

Chatbots today, intergalactic colonization tomorrow. In 3026 our nth-great-grandchildren will encounter a race of intelligent aliens in a distant galaxy, and the aliens will be like “how did you get here,” and our descendants will say “well it started with some slop feeds” and the aliens will be like “what.”

But, ok, sure, space data centers, whatever.

It is absolutely insane to think that SpaceX needs Twitter. Like, what could possibly be the rationale? SpaceX is bringing down the cost of launching matter into space, so it needs the world’s worst social network / CSAM generator to come along for the ride?

More than one person has pointed out that there are a lot of ulterior motives potentially at play.

Musk purchased Twitter with tons of debt, immediately removed a ton of safeguards against basically any content, and then was shocked that advertisers left the platform in droves. Last I heard, revenue was down 40% from pre-purchase. But it is worth noting: it’s not Musk who has the debt. It’s Twitter. In a leveraged buyout, the debt gets put on the acquired company’s books. So Twitter still has to pay interest on the debt. When Twitter merged with xAI in 2025, all of that debt was transferred to the AI company. And now, with the SpaceX merger, all of that debt gets transferred to the rocket company.

This is a crazy amount of self dealing, and I think it’s fair to say that no one else in the world would be able to get away with it. In any sane world, Twitter would have died a financial death. The merger with Twitter was obviously bad for xAI’s shareholders, and is bad for SpaceX’s shareholders. Most of the time, that alone would be enough to sink a deal.

But for that to matter, the shareholders need to sue or otherwise claim damages. And the reality is, a lot of the shareholders of xAI and SpaceX are the same people who owned Twitter anyway! It’s all the same people!

Matt Levine has previously written about the BlackRock theory of governance. The basic idea is that because BlackRock (and equivalents) own basically every company in the world, they care more about the success of their overall portfolio than the success of any particular business. So, for example, during COVID, these massive institutional shareholders all demanded that Pfizer make the COVID vaccines available for cheap. Why? Because even though Pfizer individually stood to make tons and tons of money by withholding the vaccines, the broader BlackRock portfolio does worse when the world economy is shut down.

Almost 30% of Pfizer Inc.’s stock is held by Vanguard Group, BlackRock Inc., State Street Corp., Capital Group Cos. and Wellington Management Group. All of those are giant institutional investment firms that own shares of hundreds or thousands of companies, and those are just Pfizer’s biggest holders; lots of investors lower down the list are also huge diversified institutions. If Pfizer finds a coronavirus vaccine and distributes it as widely as possible—even at cost, even below cost, even for free, even at an enormous loss—it will make its owners richer by many many billions of dollars. BlackRock, for instance, owns about $16 billion of Pfizer stock. If Pfizer went to zero—if it bankrupted itself, selflessly producing and distributing vaccines—BlackRock (really its clients) would lose $16 billion. BlackRock owns about $2.9 trillion of other stocks; if a coronavirus vaccine allowed businesses to reopen and normal economic life to resume, and as a result those other stocks went up by 1 percent, that would more than make up for bankrupting Pfizer.

For BlackRock, I mean. BlackRock would be happy with that tradeoff, as would its clients, as would Vanguard and State Street and, in all likelihood, a majority of Pfizer’s shareholders, many of whom are diversified investors who own a lot of companies that aren’t Pfizer and are struggling.
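To make the quoted tradeoff concrete, here is the back-of-the-envelope arithmetic as a small Python sketch. The only inputs are the two figures from the quote ($16 billion of Pfizer, $2.9 trillion of other stocks); the function names are illustrative, and it assumes, as the quote does, that Pfizer’s loss and the gain on everything else are the only things that change.

```python
# Back-of-the-envelope version of the quoted BlackRock/Pfizer tradeoff.
# Inputs are the two figures from the quote; function names are illustrative.

PFIZER_STAKE_BN = 16.0    # BlackRock's Pfizer holding, in $ billions
OTHER_STOCKS_BN = 2900.0  # BlackRock's other holdings ($2.9 trillion), in $ billions


def net_change_bn(pfizer_loss_fraction: float, other_stocks_gain: float) -> float:
    """Change in portfolio value ($bn) if the Pfizer stake loses
    `pfizer_loss_fraction` of its value while every other holding
    rises by `other_stocks_gain` (0.01 means +1%)."""
    loss = PFIZER_STAKE_BN * pfizer_loss_fraction
    gain = OTHER_STOCKS_BN * other_stocks_gain
    return gain - loss


def break_even_gain() -> float:
    """Gain on the other holdings that exactly offsets Pfizer going to zero."""
    return PFIZER_STAKE_BN / OTHER_STOCKS_BN


if __name__ == "__main__":
    # Pfizer goes to zero, everything else rises 1%: a net gain of about $13bn.
    print(f"net change: ${net_change_bn(1.0, 0.01):.1f}bn")
    # Break-even is a gain of roughly 0.55% on the other holdings.
    print(f"break-even gain: {break_even_gain():.2%}")
```

In other words, the 1 percent in the quote is generous: anything above about half a percent on the rest of the portfolio more than covers a total loss on Pfizer.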

I think the same general thing is true here. All of the shareholders in one Musk-verse company also own all of the other Musk-verse companies. So, broadly, they care more about whether the overall Musk-verse is doing well, rather than any particular Musk stock. Buying Twitter with tons of debt is a terrible idea if you’re a random investor. But buying Twitter with tons of debt and then hedging by buying SpaceX is a great idea! Musk used Twitter to get close with the Trump admin, and then got the Trump admin to pump billions of dollars into SpaceX through government contracts. Probably all of the Twitter investors were fine with this trade, because they all made more money on SpaceX than they lost on Twitter.

[1] If not, please DM me, because how did you end up in this corner of the internet?

[2] For right now, at least.

[3] And if you have, and your name is Buzz or David or Charles or Harrison, how did you end up in this corner of the internet?

[4] Off the top of my head: OpenAI’s Sora, Tesla’s internal world model, and I think NVIDIA has one. Also Fei-Fei Li’s startup.

A point Lemoine made that I take seriously is that we're self-interested and unreliable judges of consciousness in others. Lacking an objective test, we decide whichever way benefits us. This gives AIs the same plight as every oppressed human ever. Lemoine didn't make his arguments in the best way possible, but the degree of ridicule leveled at him is fairly strong evidence that this point of his is right. I strongly don't want to be a slave owner.

There’s a reason Yudkowsky et al. use the term “optimizer”: it doesn’t have to have consciousness, or two legs and no feathers, or whatever. The only property a dangerous agent needs in order to cause trouble is the ability to somehow solve problems in the “real” world (including “virtual” things like bank accounts)… and we are getting close.