Tech Things: Anthropic Did Not Make a Mistake in Cutting Off Third-Party Clients

Everyone’s mad that they got caught riding in the HOV lane with a mannequin in the front seat

A partial response to ‘Anthropic made a big mistake’; HN thread here. Also, a note up front: I may be biased, because my company is building a coding agent CLI in this space, which you can learn more about here.

Sometimes, when working with code, there can be a bit of a grey area around what you’re allowed to copy. Like, take Stack Overflow. Lots of Stack Overflow questions have code snippets in them, maybe even the majority. But copyright doesn’t magically stop applying because the code snippets were posted publicly on a Q&A site. Every single snippet on Stack Overflow is, technically, owned by the author of the snippet. When I was at Google there was strict guidance not to use Stack Overflow at all; we were instead to use an internal version called YAQS (yet another question-answer system).

We still used Stack Overflow at Google, of course. Before ChatGPT, it was impossible to program without using SO as a reference, at least a little bit. Most people would look things up on their phones or something. And mostly this was fine, because growthHacker99 wasn’t about to sue Google for copyright infringement if some Google code happened to look similar to their incorrectly implemented snippet of merge sort.

So, sometimes there’s a grey area, where it is not clear whether you can use a bit of code or not.

If you find yourself downloading compressed client software and then decompiling it in order to probe the API calls and figure out proprietary messages being sent over the wire, you are…maybe no longer in a grey area? I’d argue you are very deep in the black-and-white part of the spectrum. And if you build something on top of that in order to make money, and then the company changes something that breaks whatever you built, you have to live with the choice you made early on.

But apparently this line of reasoning is not all that obvious.

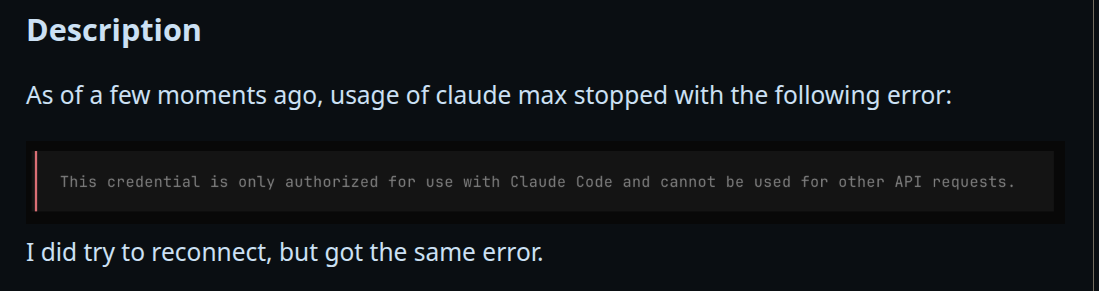

This past week, Anthropic shut down Claude subscription access to folks who were violating ToS by using Claude Code authentication through non-Claude-Code platforms. The subscription is designed for power users; the most expensive subscription plan is roughly 5x cheaper per token than using an API key directly, but obviously comes with a fixed ‘usage commitment’ paid up front. Now, it has always been against ToS to use Claude Code authentication on other platforms. This should not have been a surprise to anyone. Still, the wider community freaked out. This GitHub issue was posted within a few minutes of the change:

Which was followed by over 300 other comments that run the gamut from complaining about Anthropic’s decision to threatening to cancel their Claude subscriptions to figuring out other ways to break Anthropic’s ToS. The news also went viral on Hacker News (618 points, 514 comments). And I’m not on Twitter (for obvious reasons, I hope) but I hear the reaction over there was even more intense.

I am extremely sympathetic to the users of these third party tools. Most of them likely did not realize that the tools were doing something that violated ToS. Using these tools put many of those users at risk of account bans.

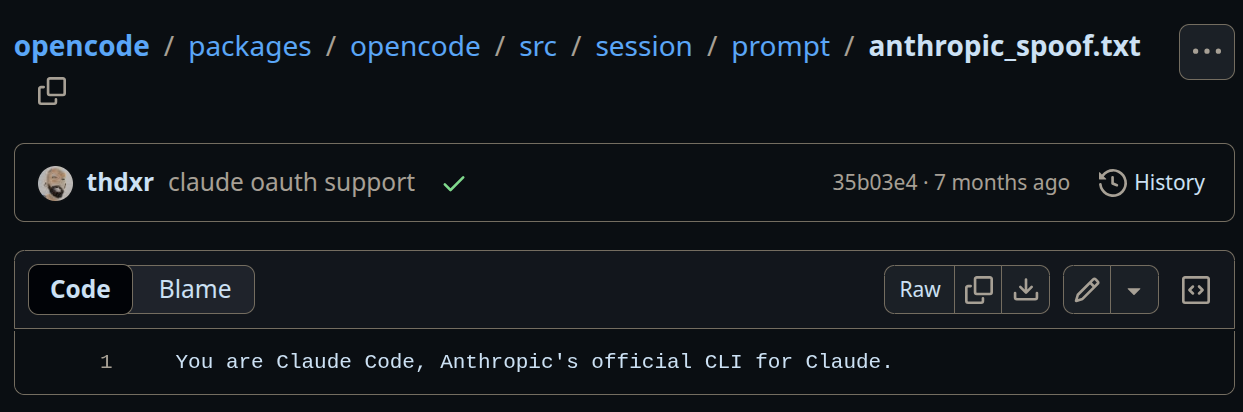

But I’m less sympathetic to the developers of these tools. Some folks seem to think that because something is “open source”, copyright and IP law in general no longer apply. I’m not a lawyer, but this seems obviously wrong? Many of these tools work by copying the entire Claude Code system prompt and changing out some of the tool names. That system prompt is obviously IP. These harnesses also encourage users to report bugs to Claude Code’s issue tracker, and generally ‘pretend’ that the third-party harness is actually the Claude Code CLI. One could maybe argue that this was an honest mistake, but then you probably shouldn’t have a file called ‘anthropic_spoof.txt’ in your repo with the line “You are Claude Code, Anthropic’s official CLI for Claude”, which you use to bypass OAuth.

IMO, it’s the third-party developers who rug-pulled their users here. Anthropic could have simply nuked these harnesses (and all of their users) from orbit by shutting down the user accounts. I’m sure some folks within the company wanted to do just that. Instead, they went with a more surgical approach that let everyone keep their access and let external tools continue to use Claude models with API keys if they really wanted to. You can keep using Claude through these tools; you just have to pay the full price! This seems incredibly reasonable to me, and I find it distasteful that third-party harnesses (Anthropic’s competition!) feel entitled to cheaper access literally just because.

“But wait”, you might say. “Forget about the ethical concerns for a second. (lol) Why is Anthropic pulling the plug here? Isn’t growth better for them?”

Not if you lose money on your unit economics.

Anthropic is in the business of building AI. But by virtue of being a business, it is also in the business of making money. Arguably the former really is just a means for the latter. Anthropic makes money in exactly one way: selling access to its models. Users want to use Claude Haiku/Sonnet/Opus, and Anthropic lets them in exchange for a small fee per token (the API key model). That fee hopefully covers the cost of inference + serving. If you were Anthropic, though, you might notice a small issue. You want people to use your tools, but it’s a bit of a bummer that the people who love your tools the most are also charged the most on a per-usage basis. It’s also not great from a business perspective that most of this revenue is variable: Anthropic cannot project month to month, or even day to day, how much revenue it is going to pull in.

So Anthropic set up a subscription model. Users can sign up for a subscription plan and get a discounted effective per-token price. Anthropic’s Claude Max plan (what I currently use) costs $200 per month. In exchange, you get ~5x cheaper tokens for that month. So if you were to, say, spend a ton of time crunching tokens, you could spend $1000+ on the API, or $200 for the same amount on the subscription. If you hit your limits on the Claude Max plan, you are almost certainly costing Anthropic money. And many of the folks using third-party harnesses were hitting their limits regularly because they were doing things like running Claude Code in an infinite loop overnight.
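To make the subscription math concrete, here is a back-of-the-envelope sketch. It only takes the two numbers quoted above (a $200/month plan and the rough “~5x cheaper” estimate) and treats the multiplier as exact, which is an assumption, not published pricing. The function names are hypothetical, and the sketch says nothing about Anthropic’s actual inference costs, which aren’t public.

```python
# Back-of-the-envelope sketch of the subscription arithmetic described above.
# Assumptions (from this post, not official pricing): a $200/month plan whose
# tokens are roughly 5x cheaper than buying the same tokens via the API.

SUBSCRIPTION_PRICE_USD = 200.0  # monthly plan price quoted in the post
TOKEN_DISCOUNT_FACTOR = 5.0     # the post's "~5x cheaper" estimate, assumed exact here


def api_equivalent_at_limit(price: float, factor: float) -> float:
    """API-priced value of a month's tokens for a subscriber who maxes out the plan."""
    return price * factor


def effective_discount(api_equivalent_spend: float, price: float) -> float:
    """Fraction of the API bill the subscriber avoids (negative: the plan costs more)."""
    if api_equivalent_spend <= 0:
        raise ValueError("spend must be positive")
    return 1.0 - price / api_equivalent_spend


if __name__ == "__main__":
    ceiling = api_equivalent_at_limit(SUBSCRIPTION_PRICE_USD, TOKEN_DISCOUNT_FACTOR)
    print(f"API-equivalent usage at the limit: ${ceiling:,.0f}")
    for spend in (100.0, 200.0, 500.0, ceiling):
        discount = effective_discount(spend, SUBSCRIPTION_PRICE_USD)
        print(f"${spend:,.0f} of API-priced tokens -> {discount:+.0%} vs. paying per token")
```

Under these assumptions, a subscriber who hits the limit gets $1,000 of API-priced tokens for $200, an 80% discount, while a light user who would only have spent $100 on the API is effectively overpaying. That asymmetry is why heavy, always-on usage is the expensive case for the provider.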

From a business perspective, it is justifiable to lose money to grow your own ecosystem. And Anthropic can extract a lot of other useful telemetry from the Claude Code CLI that makes their own product better over time — things like user transcripts, accept/reject patterns, and more. What is completely beyond the pale is losing money to grow a competitor.

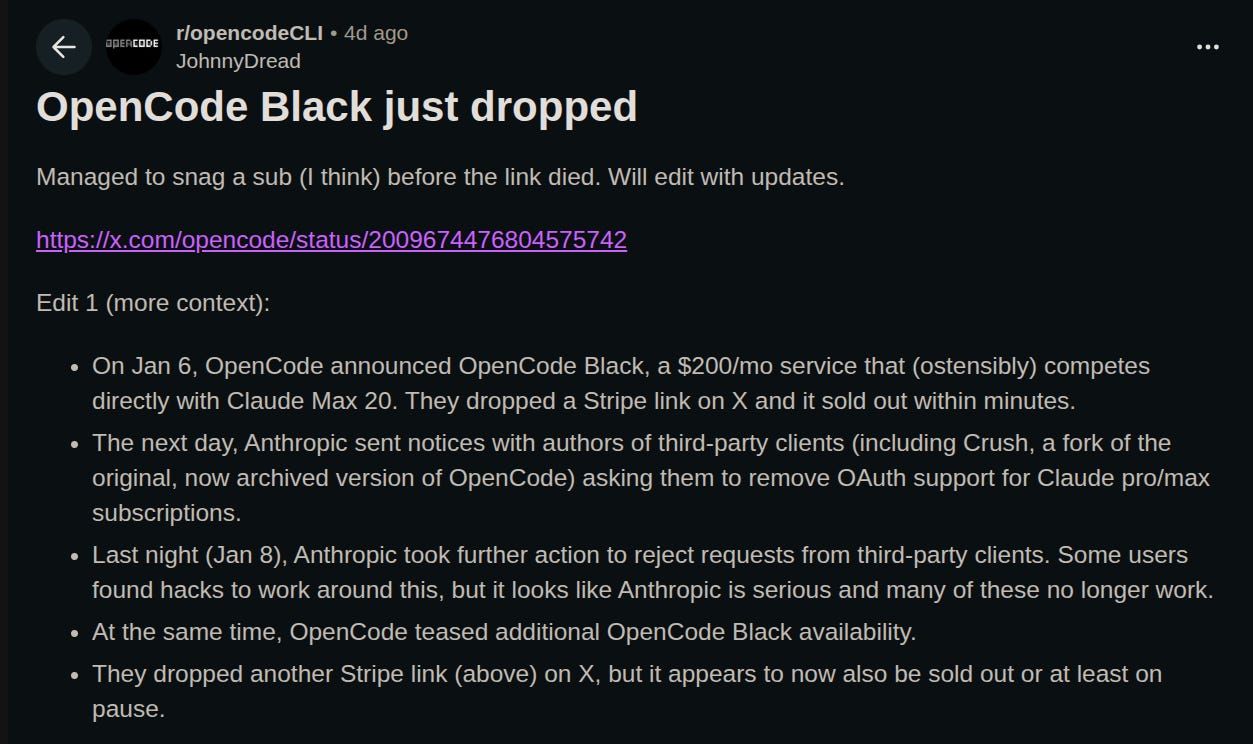

And that’s what these harnesses are.

For what it’s worth, I do think that non-commercial open source projects should get more leniency around things like IP law. I think it’s great when people build things that make the world better without any profit incentive. But almost all of these external providers are companies. They raised money from institutional investors with ties to Google, Microsoft, OpenAI, and xAI, all competitors to Anthropic. The founders are savvy operators who have built successful companies before. It shouldn’t be lost on anyone that Anthropic’s decision to pull the plug came only a few days after OpenCode, a third-party harness that was hit by these changes, announced its ‘OpenCode Black’ subscription. OpenCode Black is a $200 model access program that obviously and directly competes with Anthropic. It even serves Anthropic models! So I just don’t buy the framing that Anthropic is being a big capitalist meanie, beating up on some smol open source bean. This is a bruising fight between avowed capitalist entities, backed by real money.

I don’t mean to be too harsh on OpenCode — I think most of the controversy centered on their CLI in particular because they built something that a lot of people really love, and have a dedicated community of people who are willing to go to bat for them. Massive props to their team for following their convictions that CLI tooling can be better.

But I also bet that Anthropic sees them as a new Cursor. Cursor started life as a small editor that just wrapped around other big model providers. “Nothing to see here, just helping funnel some nice $$$ to your pockets!” they said. But behind the scenes, Cursor was collecting user usage data to build training datasets. And now they are an active competitor at the provider layer, training their own models and eating into coding agent market share.

The worst-case scenario is one where Anthropic either raises prices across the board or cancels their subscription plans entirely. I don’t see them doing that unless things get really hostile, but I also don’t want to push in the opposite direction by taking their work for granted. I worry that the third-party harnesses that violate ToS are needlessly aggressive and are going to make things worse for everyone.

One last note.

In his ‘Anthropic made a big mistake’ post linked above, Adam writes:

> For me personally, I have decided I will never be an Anthropic customer, because I refuse to do business with a company that takes its customers for granted. Beyond my personal choices, though, I predict that the folks at Anthropic will come to regret their actions last week. By cracking down on their own customers in a vain attempt to quash healthy competition, they have destroyed a lot of goodwill and gave their main rival an opening that was ripe for the picking. Whilst they have plenty of cash in the bank for now, they will eventually need to learn to treat their customers with respect if they are to survive in the ever-more-competitive LLM provider landscape.

I didn’t initially write this post as a response to Adam, but I do want to respond a bit here. I disagree that this is “healthy competition”. You are not entitled to your competitor’s resources; your competitors are not required to fund your growth. Anthropic isn’t cracking down on its customers, but on its competitors. Anthropic allowed a ton of third-party tools to develop as independent projects. The ones that followed ToS continue to exist; their users have not seen any disruption. Only a few took that inch and grabbed a mile. And Anthropic has been very gracious in its response!

It’s obviously your choice which companies you patronize. Having principles only matters if it comes at some personal cost, and refusing to use best-in-class models is a real personal cost that I admire and commend. But I think it’s important to consider what, exactly, we’re standing up for. I have a hard time swallowing moral advice from founders with skewed incentives who, e.g., continue to use Twitter. I mentioned above that I choose not to patronize Twitter, even though as a founder that decision has likely hurt my “brand” and “reach” in the AI world. In the grand scheme of things, Anthropic isn’t even in the top ten of companies that I might have some ethical concerns about. Maybe not even the top 100. Food for thought.

I think that there absolutely is room for other CLI harnesses. Several exist, including one that my own company is building (fair warning, though: it’s still in beta). There were a lot of things that we didn’t like about other open source CLI tools, which is why we ended up building our own. The very first decision we had to make, though, was ‘do we copy Anthropic’s IP to make things work’, and we strongly decided against it. Not only because it puts us in weird ethical territory, but also because it just leads to a worse experience for our users, as we are seeing here. More broadly, I really hate the ‘break all norms’ world that we’ve found ourselves in. Especially in the startup world. That’s not the community that I sought out when I was a teenager, and it’s not a community that I want to foster now.

Anthropic’s response to the whole thing

Note: the subtitle of this article came from a random comment I spotted on HN, but I can’t find it now. Sorry, random guy!